It’s not too late to regulate persuasive technologies

Social media companies such as TikTok have already revolutionised the use of technologies that maximise user engagement. At the heart of TikTok’s success are a predictive algorithm and other extremely addictive design features—or what we call ‘persuasive technologies’.

But TikTok is only the tip of the iceberg.

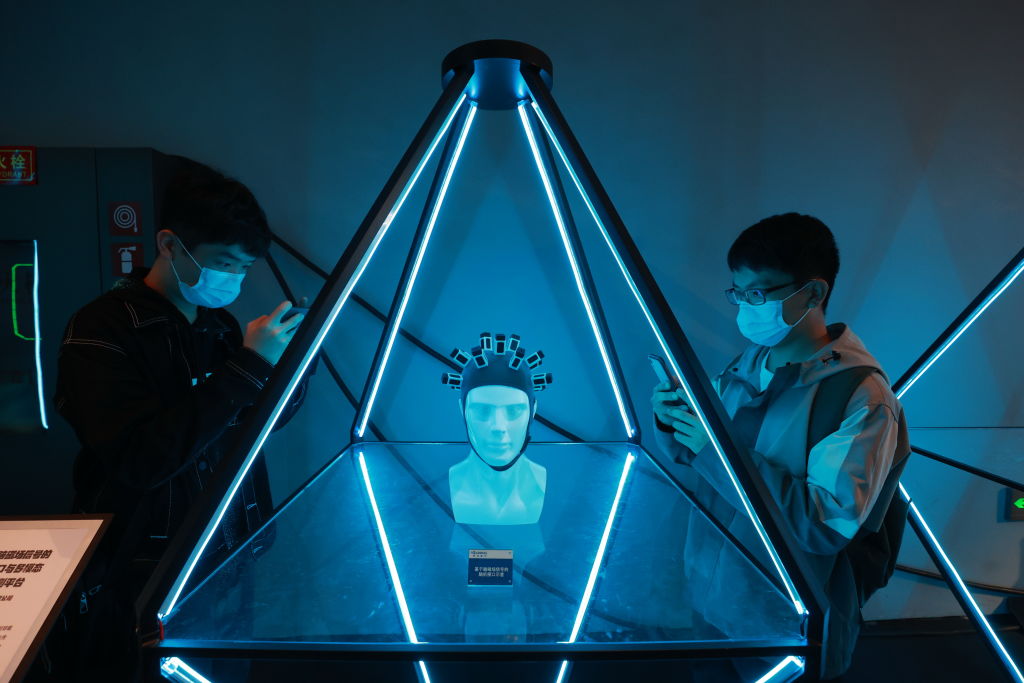

Prominent Chinese tech companies are developing and deploying powerful persuasive tools to work for the Chinese Communist Party’s propaganda, military and public security services, and many of them have already become global leaders in their fields. The persuasive technologies they use are digital systems, such as generative artificial intelligence, neurotechnology and ambient technologies, that shape users’ attitudes and behaviours by exploiting physiological and cognitive reactions or vulnerabilities.

The fields in which these companies lead include generative artificial intelligence, wearable devices and brain-computer interfaces. The rapidly advancing tech industry to which these Chinese companies belong is embedded in a political system and ideology that compels companies to align with CCP objectives, driving the creation and use of persuasive technologies for political purposes at home and abroad.

This means China is developing cutting-edge innovations while directing their use towards maintaining regime stability at home, reshaping the international order abroad, challenging democratic values, and undermining global human rights norms. As we argue in our new report, ‘Persuasive technologies in China: Implications for the future of national security’, many countries and companies are working to harness the power of emerging technologies with persuasive characteristics, but China and its technology companies pose a unique and concerning challenge.

Regulation is struggling to keep pace with these developments, and we need to act quickly to protect ourselves and our societies. Over the past decade, the swift development and adoption of technology have outpaced responses by liberal democracies, highlighting the urgent need for more proactive approaches that prioritise privacy and user autonomy. This means protecting and enhancing the ability of users to make conscious and informed decisions about how they interact with technology and for what purpose.

When the use of TikTok started spreading like wildfire, it took many observers by surprise. Until then, most had assumed that a successful social media algorithm required a free internet to gather the diverse data set needed to train the model. It was difficult to fathom how a platform modelled after its Chinese twin, Douyin, developed under some of the world’s toughest information restrictions, censorship and tech regulations, could become one of the world’s most popular apps.

Few people had considered the national security implications of social media before its use became ubiquitous. In many countries, the regulations that followed are still inadequate, in part because of the lag between the technology and the legislative response. These regulations don’t fully address the broader societal issues caused by current technologies, which are numerous and complex. Further, they fail to appropriately tackle the national security challenges of emerging technologies developed and controlled by authoritarian regimes. Persuasive technologies will make these overlapping challenges increasingly complex.

The companies highlighted in the report provide some examples of how persuasive technologies are already being used towards national goals—developing generative AI tools that can enhance the government’s control over public opinion; creating neurotechnology that detects, interprets and responds to human emotions in real time; and collaborating with CCP organs on military-civil fusion projects.

Most of our case studies focus on domestic uses directed primarily at surveillance and manipulation of public opinion, as well as enhancing China’s dual-use technology capabilities. But these offer glimpses of how Chinese tech companies and the party-state might deploy persuasive technologies offshore in the future, and increasingly in support of an agenda that seeks to reshape the world in ways that better fit China’s national interests.

With persuasive technologies, influence is achieved through a more direct connection with intimate physiological and emotional reactions compared to previous technologies. This poses the threat that humans’ choices about their actions are either steered or removed entirely without their full awareness. Such technologies won’t just shape what we do; they have the potential to influence who we are.

As with social media, the ethical application of persuasive technologies largely depends on the intent of those designing, building, deploying and ultimately controlling the technology. They have positive uses when they align with users’ interests and enable people to make decisions autonomously. But if applied unethically, these technologies can be highly damaging. Unintentional impacts are bad enough, but when deployed deliberately by a hostile foreign state, they could be so much worse.

The national security implications of technologies that are designed to drive users towards certain behaviours are already becoming clear. In the future, persuasive technologies will become even more sophisticated and pervasive, with the consequences increasingly difficult to predict. Accordingly, the policy recommendations set out in our report focus on preparing for, and countering, the potential malicious use of the next generation of persuasive technologies.

Emerging persuasive technologies will challenge national security in ways that are difficult to forecast, but we can already see enough indicators to prompt us to take a stronger regulatory stance.

We still have time to regulate these technologies, but that time is running out for both governments and industry. We must act now.