On the whole, the internet has been a tremendous boon for society, but it has also exposed all of Australia—our people, our economy and our government—to sources of unexpected danger from across the entire planet. Criminals, crooks and scammers in other countries can now reach out and hurt us by stealing our data, our identities and our money, and by disrupting our businesses. And although these crimes can be perpetrated in cyberspace, our justice system is historically designed for the physical world and these criminals are usually beyond the reach of our laws.

When it comes to national security, cyber operations are now one of the main ways that states engage in strategic competition to gain advantage without warfare.

The entirety of online Australia is subject to attack, but the sad truth is that only a minority of Australian people and organisations are able to defend themselves. According to the latest official figures, a new cybercrime is reported to Australian authorities every 10 minutes, a staggering statistic given that perhaps fewer than a third of these crimes are actually reported.

Even large businesses are not immune from online dangers. This year alone BlueScope Steel, transport company Toll Holdings and brewer Lion Australia have had their operations interrupted by ransomware. If large operations are not immune, how are the 98% of Australian businesses with fewer than 20 employees to cope?

An ASPI International Cyber Policy Centre report released today examines ‘Clean Pipes’, the concept that internet service providers (ISPs) offer enhanced levels of default security to their customers.

Some of the most effective security interventions in recent decades have involved providing ‘invisible’ security—security that is delivered by default to end users without requiring any skills or work on their part. These default protections have occurred at many different layers in our computing and communication infrastructure.

For example, one of the ways that operating system vendors such as Microsoft and Apple have made their products more secure is through automatic updates that allow security improvements to be distributed without requiring any user intervention. Building on top of these operating system improvements, browser manufacturers have built systems (Google’s Safe Browsing and Microsoft’s Defender SmartScreen, for example) that warn users before they head to dangerous sites. These initiatives aren’t perfect, but Google’s transparency report states that its Safe Browsing service issues five to 10 million warnings a week to users.

Our ISPs are well placed to implement similar initiatives that improve the security of millions of Australians without their needing to be cybersecurity experts. Conceptually, this requires that ISPs positively identify threats, have some ability to proactively deal with them (such as warning users, blocking attack traffic or removing bogus traffic), and be able to adjust their responses dynamically as the environment changes.

ISPs already have some of these systems in place to protect their own networks and, to a greater or lesser extent, already use that capability to protect their customers. So this is not a case of building an entirely new system to protect Australians. Until now, however, there’s been no widespread belief, among either ISPs or their customers, that providing enhanced default security to customers was an ISP’s job, nor has government imposed any obligation or regulation. In the absence of any expectation or obligation, the investments needed to provide a more secure service haven’t been made.

This hands-off approach to security may have been appropriate for the early days of cyberspace, but as the internet has become increasingly important and the consequences of online crime and interference have become more dire, we need more robust protections. The Australian government should drive greatly expanded adoption of Clean Pipes so that more ISPs provide more effective protection to more Australians. The key advantage of this approach is that it provides advanced, scalable protection for the millions of Australians who cannot provide for their own online security.

Recently announced government funding of over $35 million to develop a ‘new cyber threat-sharing platform’ and over $12 million towards ‘strategic mitigations and active disruption options’ could certainly assist in the implementation of a Clean Pipes program. Without an injection of government funds and leadership, it’s likely that the status quo will continue.

Australian governments don’t have a stellar track record of explaining their internet initiatives to the public. To avoid Clean Pipes being mired in unnecessary controversy, government actions and communications should maintain a clear focus on protecting users, and leave copyright enforcement and the removal of abhorrent material to their own separate mechanisms.

Clean Pipes is an idea whose time has come. Everyone involved in delivering services on the internet needs to accept an obligation to protect their users.

On 14 May, the Australian parliament passed legislation setting out the framework for the collection and use of data from the COVIDSafe contact-tracing app. The law amended the Privacy Act 1988 ‘to support the COVIDSafe app and provide strong ongoing privacy protections’.

Much focus has rightly fallen on who can access the data gathered by the app, and for what purpose; however, little attention has been given to a provision that permits the collection and analysis of de-identified data for statistical purposes.

This provision is not, of itself, troubling. De-identification or anonymisation is the process of stripping out characteristics that can identify the people who provided the data. For data analysts, it’s a routine way to use large datasets to uncover trends and insights while protecting the privacy of individuals.

But de-identification, while laudable in principle, suffers from a technical problem—it is possible to re-identify, or de-anonymise, the individuals.

This isn’t a far-fetched or theoretical event. A notable example was the revelation by University of Melbourne researchers in 2017 that confidential, de-identified federal patient data could be re-identified without the use of decryption. In another study, the same researchers were able to re-identify users of Victoria’s public transport system, demonstrating that only two data points were needed to re-identify individuals from large datasets.

The problem of re-identification is likely to be made worse by advances in artificial intelligence. In a recent UK report on AI, Olivier Thereaux of the Open Data Institute noted that even with ‘pretty good’ de-identification methods, AI systems can re-identify data by cross-referencing against other datasets.

So, there are trade-offs. The more effective a technology is at drawing out insights from data, the more likely it is that individuals’ privacy will be undermined. And the more we attempt to strip out information to protect privacy, the less useful the dataset becomes for legitimate research.

This is not inherently problematic, or new. UK Information Commissioner Elizabeth Denham says in the same report that there’s ‘no such thing as perfect anonymisation; there is anonymisation that is sufficient’. Her comments are in the context of the UK Data Protection Act, which criminalises illegitimate dataset re-identification.

If Australia is serious about protecting data collected by government departments, agencies and other organisations to which the Privacy Act applies, it should follow the UK example.

The government has considered this issue before. In response to the 2017 incident with the patient dataset, the Coalition introduced a bill to criminalise illegitimate re-identification of datasets. However, the bill died in review because of concerns that it would have a chilling effect on cybersecurity research.

Those concerns are legitimate. Researchers shouldn’t feel under pressure to stop calling out poor privacy practices. It’s in the public interest for organisations to improve how they collect and publish datasets.

But the need to enable legitimate cybersecurity research is also not a difficult obstacle to overcome. An exception could be made in the act for public-interest research by bodies such as universities, think-tanks and NGOs. That could be done in one of two ways.

Researchers could be given the right to apply for the equivalent of a licence to re-identify government datasets. Something similar has been done under the Defence Trade Controls Act, which includes a requirement for researchers to get a licence if they want to collaborate internationally on certain technologies.

Such an approach would ensure that anyone seeking to re-identify data is thoroughly checked. But it’s also overkill. Australia’s original data privacy bill called for licensing or obtaining ministerial permission for such work, but it was criticised for failing to provide a clear exemption for public-interest research.

The other way is to write a defence or an exception to liability into the law that would place the legal burden of proof on the state. By contrast, licensing would require researchers to demonstrate that their work is in the public interest.

That approach has the benefit of providing a clear exemption for researchers, and it’s unlikely that prosecutors will be able to prove that university research isn’t in the public interest.

Britain took that approach in its Data Protection Act, which includes a public-interest defence to criminal liability.

Of course, neither approach blocks the ability to re-identify datasets, any more than criminalising murder prevents people from murdering. But it would be an effective deterrent and moral indicator of unacceptable data practices.

Moreover, such a provision would demonstrate the government’s commitment to protecting privacy. This is especially important now as the government tries to persuade 10 million of us to download a tracing app.

The concerns raised by the COVIDSafe app suggest that Australians care a lot about privacy, at least when information to be held by the government is involved. Facebook’s data-collection policies don’t appear to cause nearly as much concern. Let’s turn that passion into action, starting with bolstering the privacy protections on large datasets.

This article is part of a series on women, peace and security that The Strategist is publishing in recognition of International Women’s Day.

In an era in which disruption rules, suggesting we should say no to some innovation could be seen as revolutionary. Because there’s good innovation, there’s bad innovation, and there’s just plain evil innovation. Not all change is progress.

But the values that determine what is good, bad and evil are not bound by time and should remain eternal. They are the lights that guide us on an uncharted path, as the stars guided navigators at sea for thousands of years. If they hadn’t been there—and constant—many more adventurous innovators would have foundered on the rocks.

So, in our quest for knowledge, we need to take the time to pause and think about the kind of world we want as we innovate. That means we have to consider the unintended consequences and alternative applications.

And we must ensure women are at the table shaping our technology-dependent future.

Because a tracking device in a key ring can save us from ourselves. But it can also allow a control-freak boyfriend to monitor his girlfriend’s every move.

A doll with a camera can delight your child with its novelty. But it can also let an abusive former partner spy on a woman who has fled domestic violence.

A centralised locking system can secure your home remotely. But it can also imprison you.

In its Innovation for gender equality report, UN Women acknowledged that good innovation can be a ‘driver for change’ by accelerating access to opportunities for women across the globe.

But ‘it is increasingly clear that technology and innovation can be rejected; that they can create new, unforeseen problems of their own; and that they do not benefit all equally. Not only are women under-represented across core innovation sectors, including science, technology, engineering and mathematics, but new technology brings risks of bias and possibilities for misuse, creating new human rights challenges for the 21st century.’

Fortunately, the under-representation of women in science, technology, engineering and mathematics and the impact that has on national security, productivity, innovation, research and the economy have been acknowledged by governments, industry and academia.

In Australia, programs now target attracting, retaining and progressing girls and women studying and working in STEM. Peak associations, mentoring groups and ambassadors encourage, support and provide role models for girls and women. Schools and universities offer coding subjects and run hacking competitions.

From a cybersecurity perspective, this is all good work that must continue apace, particularly given the massive and well-documented international and national skills shortage—some say crisis—in the sector.

But the challenges of cyberspace are as much, if not more, human than technical.

While technical knowledge will always be important, it will be ‘institutional, cultural and social changes that will be most effective in mitigating cyber insecurity. New ways of thinking, new understanding and new strategies to the emerging digital age realities will be vital.’ This will require a workforce with political, social, legal, people and technical skills.

So, to fill the enormous and immediate demand for these skills, we need to think and recruit creatively and laterally so that we harness the full potential of our talent pool and bolster diversity in a dynamic and fast-changing industry.

This seems to be underway.

In a survey of more than 300 women in cybersecurity, fewer than half entered the field through information technology or computer science, coming instead from compliance, psychology, internal audit, entrepreneurship, sales, arts and other backgrounds.

Thanks to new thinking, understanding and strategies, the dial appears to be shifting on the number of women in cybersecurity since the alarm was raised over findings that just 11% of the sector was female.

Women now make up 24% of the sector in North America, Latin America and the Asia–Pacific—thanks in part to a new, more inclusive definition of the cybersecurity workforce—and 25% in Australia.

Women now head Australia’s signals intelligence, cybersecurity, cyber research and cybersecurity growth network agencies, to name just some of the female leaders in the sector.

Women have made real gains in cybersecurity in recent years. These wins are important, and they should be celebrated. But we need to keep the pedal firmly to the metal.

We need to keep addressing issues of pay gaps, systemic bias, recruitment pools, workplace culture, role definition and work–life balance. And we need to continue to invest our energies in attracting, retaining and progressing women in STEM and in the technical and non-technical fields of cybersecurity.

This is not just an economic and national security imperative. It’s a human rights imperative—to ensure women are liberated by the innovation and technology of the future, not enslaved by it.

Making judgements about the state of cybersecurity isn’t easy. Much depends on the metrics we use to measure success and failure, where it’s all too easy to fall into the trap of doing things right rather than doing the right thing.

Asking ourselves whether we’re doing things right merely asks us to measure our progress down a prescribed path. Judgements about whether we’re doing the right things are harder to make. It’s entirely possible we’re not even on the right path, regardless of how far along it we’ve come. A word of warning: this is a fairly dense and difficult topic.

Cybersecurity—at its core—is cloaked in both technical and operational obscurantism. Operationally, an important chunk of what we might think of as cybersecurity’s bandwidth is about countering subversion, espionage and sabotage—all activities where the defender must be just as adept as the attacker in the black arts of disinformation, hiding and diversion. Trying to measure whose artistry is blacker, when both sides are doing all they can to conceal their true capabilities, is a fool’s errand.

We might consult those in the actual business of cybersecurity, of course, to ask whether things are getting better or worse. But the business model of many in the industry, and even messaging by decision-makers, typically feed on fear, uncertainty and doubt.

Alternatively, we could interrogate manufacturers to examine whether enhanced security features are now the default attributes of their products. The answers would be mixed. Many newer applications originate in civilian offices and research facilities a long way from government. They may be found, often without differentiation, in the civilian, national security and military worlds.

Another option is to make some judgements about success and our own situation based on the health of that which we are seeking to protect. Let’s do that.

First, let’s look at the current apparent trends in the environment. There lies perhaps the strongest case that we are failing. The Department of Home Affairs’ discussion paper makes the case that the environment is increasingly threatening and that the costs of mitigating those threats are increasing, as does report after report from industry.

Plus, there’s an increasingly threatening geopolitical environment. Advanced persistent threat actors, driven by geopolitical interests, are progressively more brazen, targeting national security, commercial and personnel systems and data across a wide range of sectors, regions and activities. There are deepening concerns over supply chains, ‘insider threats’ and the theft of intellectual property developed in Western universities, research institutions and companies.

Non-state actors are proving not merely resistant but more adept than nation-states at exploiting the internet. Child exploitation is increasing. Successes against groups such as Islamic State may prove ephemeral as they adapt. Ransomware is a tool of choice of criminal groups and pariah states, and is now directed at a wide range of industry sectors, from small and medium-sized businesses to local and state governments. Once code is in the wild, anyone can use it, broadening the number of possible attackers and deepening their arsenals.

It’s not pretty and it’s fair to conclude that we are worse off.

It’s hard to assess expenditure on cybersecurity—after all, it’s often rolled into other programs or comprises a series of disjointed activities. Australia spent $238 million over four years on its 2016 cybersecurity strategy, with another $300–400 million allocated to Defence over 10 years.

The UK government assigned £1.9 billion ($3.7 billion) to its 2016–21 cybersecurity strategy, while the US government is seeking US$17.4 billion ($25.6 billion) for cybersecurity in 2020 alone. As the UK public accounts committee also noted, it’s often hard to see the value of such expenditure.

Cybersecurity must contend with threats and vulnerabilities that are often unknown or uncertain and that exhibit non-linear behaviours. They may lie dormant for months, even years, or be constant and escalating. They may arise either from external actors or from internal issues that may be deeply technical (in code, logic or architecture) or simply social (practice, process, cultural).

Cybersecurity also feeds on technical debt, particularly the type that represents failure to maintain and update systems and ensure adequate support over time. Funding mechanisms typically favour new builds through capital funding rather than maintenance, patching and updates, support and skills development, all of which draw on operating expenditure.

That funding pattern invariably means that the newest, shiniest bit of kit gets the most attention—attention that in many instances may be better devoted to the legacy systems and processes within which the new piece of kit is nested. The result is the steady accretion of complexity, cost and vulnerability.

A good deal of cybersecurity is about doing the technology well. But that requires constant attention, adaptability, and a sound partnership with and knowledge of the business; good governance and culture; expertise and capability; and sufficient funding. And, of course, we are simply not as good as we think we are—or should be—at building, integrating or running highly complex, distributed and changeable technological systems.

External costs and inertia may be found in the modern legislative environment. Thickets of regulations impair flexibility, adaptiveness and capability. They tend to encourage compliance, focusing minds on ‘ticking boxes’, often with little effect on actual cybersecurity.

Given the costs and difficulties, notions that government should resolve other organisations’ cyber problems are likely to be short-lived: the complexity, costs, resources and assumption of liability required are simply too great.

It’s unrealistic to expect a government agency to possess sufficient capability or awareness of an external organisation’s local systems or activities to protect it without risking damage to that organisation’s business. Imposing standardisation risks imposing additional costs and impairing adaptiveness, the ability to learn and overall resilience.

Within the older Western democracies—weakened by populism and sclerotic economies—it may be tempting simply to exert top-down control rather than undertake the difficult work of empowering individuals and building trusted communities. That temptation increases particularly as China strengthens its economic and technological prowess.

But restricting the ability of individuals and organisations to secure their interests and identity and control their own data does not simply undermine the cyber resilience of individuals, organisations and society. It also risks our credibility and our identity as a Western, liberal democracy.

Cybersecurity is just as challenging and contentious as other areas of security in our increasingly unsettled world—perhaps even more so, given its reach into our everyday lives.

It’s difficult to argue that here, too, we are doing things right, let alone doing the right thing.

Those of us older than a certain age will recall an excellent British television series, Yes, Minister, and its successor, Yes, Prime Minister: they were required viewing for young and enthusiastic public servants in Canberra.

One of the more memorable episodes, ‘The compassionate society’, involved a hospital with no patients, though it did have 500 administrators and ancillary workers to ensure things ran smoothly. Not having patients both prolonged the life of the facility and cut running costs, ensuring it was one of the best-run hospitals in Britain.

Unfortunately, we find the same attitudes in other walks of life. The ‘socio-technical divide’ is a well-known phenomenon in technology. If only people weren’t involved, or just did what we told them, we’d have perfect systems.

That also reflects a lot of thinking and commentary around cybersecurity: ‘people are the problem’. After all, most intrusions and attacks start with people being persuaded or misled into visiting disguised or infected sites, handing over details or otherwise compromising their own systems. One estimate is that 94% of malware is delivered via email (phishing), which requires someone to preview, open and/or click on a link or file. If only people (users, clients, members of the community) didn’t do what people naturally do, we’d all have much more secure and efficient systems.

That’s muddled thinking.

First, people, thankfully, are messy. They’re changeable. They work in a variety of different styles, with different information. They desire both privacy and openness, mediated on their own terms and with people whom they get to choose. They’re impatient, focused, curious, biased, distracted, inspired and sometimes plain lazy. They’re driven by motivations that generally have little to do with the incentives of engineers and managers who build and oversee systems and data.

They’re also the reason why we build technologies, systems, organisations and institutions to start with: to enable, support, help and entertain. We need more than cybersecurity specialists; we need good people to conceive, construct and care for good, adaptable, human-centred, secure, resilient systems that account for the people who use or are supported by them.

Technology is inherently reductionist: that it fails to capture the complexity of humans and their systems should be unsurprising. Despite attempts to map ‘personas’ or ‘life journeys’, technological systems will always struggle to keep up, because there’s no universal, singular human experience. The fact that systems and system design either neglect or fail to accommodate the messiness of people and their underlying needs is fundamentally a human concern. That’s even more so as those same technologies become increasingly embedded in our daily lives.

Second, the line that ‘people are the problem’ usually applies to the faceless masses. It tends to overlook that systems designers, engineers, managers, vendors and government ministers are also people and no less prone to the same messiness, incomplete decision-making, changeability and biases as anyone else. They make mistakes, too. They respond to their own set of incentives, not necessarily others’, or society’s for that matter. And they tend to build systems that favour their own position and point of view.

Third, the systems themselves that people design, approve and administer are no less flawed. Moreover, there’s a case that the consequential misunderstandings, misinterpretations and misconfigurations are so deep in both practice and theory that everything is broken.

The result is that the whole, inevitably, is deeply complex. Complex systems have a variety of properties that make them unpredictable and less tractable to top-down, centralised control.

In such systems, cause and effect are rarely linear. Simple actions may have extraordinary effect, while massive effort may yield little change. Failures may cascade across systems, which may have networks, dependencies and behaviours previously unsurfaced and so unrecognised by managers, administrators and technical staff.

Systems and stakeholders (and their expectations) co-evolve, so that what worked once or in the last funding cycle may not do so again. And preventive action may easily create increased rigidity and tension in the system. It may be better to allow small failures (analogous to backburning, for example) if they dilute the prospects for major conflagration or collapse.

In such circumstances, attacking officials for a failure—‘heads will roll’—or blaming the victim does little to encourage staff to learn and to build resilience. Instead, it may well increase the chances of greater systemic failure later. The slow work of building human capability and a systems-level understanding is likely to yield better results than firing staff or micromanaging.

Last, we live in a liberal, Western, free-market democracy. Democracies give preference to individuals and their rights, freedoms and opportunities.

Democracies are being challenged by authoritarian regimes and illiberal thinking, including within the democracies themselves. To many—disillusioned by the failure of democratic government to cope with the turmoil caused by digital disruptions, by inequality, by discrimination and biases, and by failures in corporate governance—a populist, or even an authoritarian or strongman approach, looks attractive. It offers simple and direct action and solutions. The lack of consultation, and of transparency, is seen not as an impediment but as an enabler. And authoritarian systems are often able, at least initially, to generate more immediate and focused outcomes.

But a fundamental strength of democracies is that, by investing in individuals and giving them the freedom, and the ability, to create, build, prosper and take a large measure of responsibility for their own wellbeing, they build both legitimacy and resilience that authoritarian societies lack.

Increasingly, that’ll include their online activities as well. In a complex system, government has little hope of ensuring safety and security for all. Technological fixes will be quickly overtaken; social fixes, including legislation, risk impairing people’s ability to look after themselves; and both, imposed with little understanding or consultation, will erode trust, legitimacy, resilience and the government’s own ability to enact change.

The discussion paper released by the Department of Home Affairs on a new cybersecurity strategy raised a multitude of questions—literally. That reflects the sheer complexity of the problem at hand. And if everything is indeed broken, reaching for quick technological or even legislative fixes is likely to add further complexity, increase fragility, increase insecurity and impair resilience.

And so, rather than assuming that ‘fixing’ cybersecurity and improving safety are matters for the central government—which has stretched resources and fewer levers than it once had to enact change—decision-makers would do well to place citizens at the centre of the challenge, and to ask themselves, ‘How can we best help our people to help themselves?’

Now more than ever is the time to double down on our democratic impulses, rather than seeking false shelter in an omnipotent government.

Australian National University Vice Chancellor Brian Schmidt’s public release of a detailed report on the damaging cyberattack on ANU systems and data marks a refreshing shift in behaviour on cybersecurity for Australian public institutions.

The report is a candid, forensic account of the relentless, capable and aggressive attack on ANU systems by a sophisticated attacker between November 2018 and May 2019. It’s equally revealing about the high-end forensic and protective response that the ANU cyber team embarked on, in cooperation with Northrop Grumman and government agencies, once the breach was discovered in April.

The hacker seems to have had undisturbed access to ANU systems for half a year. They were sufficiently sophisticated to use three main avenues of attack: credential theft (to get logins to access systems), infrastructure compromise (to gain control of ANU systems and devices) and data theft (using both simple techniques, such as email, and more complex ones, such as encryption and compression).

In a mark of high-end capability beyond that of garden-variety hackers, when they couldn’t get into their primary target, ANU’s enterprise systems domain (ESD), after repeated attempts with readily available techniques, they used much more sophisticated ‘bespoke source code or malware’ that they downloaded and then ran on the ANU system. That’s how they broke into the ESD and got hold of at least some of the personal data that seems to have been their goal.

The other distinctive feature that shows the attacker had skills beyond most is what the report tells us about the ‘hacker hygiene’ they displayed—cleaning up and removing the traces of their activity as they worked. The clean-up was so adept and focused that, but for a lucky firewall change in November that shut the attacker out of a compromised ANU computer (attack station one in the report), little of the forensic detail in the report would have been available. That’s because the attacker hadn’t finished cleaning up the compromised computer before they lost access to it.

Calculations of how, and for how long, the attacker exfiltrated data suggest that less was taken than the 19 years of ANU student and staff records initially feared.

So why did this hacker decide to use their high-end tools against this prestigious Australian university? That can’t be known with certainty, but it’s always useful to go back to the Romans when investigating human behaviour. At the heart of Roman justice was the key question, Cui bono? Who benefits?

Personal data on ANU students and staff of the type held in the ANU’s ESD (names, addresses, contact details, tax file numbers and bank account details) is interesting, but by itself it’s of limited value. A criminal hacker could sell it for gain, but the ANU says there’s no indication that has happened.

Perhaps the attacker wanted this personal data first, which was why they were relentless in targeting the system that held it. But they may also have intended to hang around inside the ANU’s network, with all the opportunities that controlling an internal system and networks would provide. If that had happened, ANU research data would have been at risk. And while much ANU research is published openly, information about the lines of inquiry ANU experts have followed and found fruitless is usually not. There’s also the obvious value of intellectual property across scientific, mathematical and other ANU research areas.

Overall, though, given the hacker’s priority on targeting personal data, the most likely explanation is that they were thinking of combining the stolen ANU data with other data they already held or were getting from elsewhere. As with any of our universities, some of the ANU’s graduates go on to highly successful careers in business, politics and government (as ministers and officials), whether as leaders or as capable technical experts. Personal information about such people would be of obvious value to a foreign intelligence agency. So, taking the Roman view, it seems most likely the attacker was a state-connected entity, contributing to foreign espionage work. We won’t know which one unless the forensic evidence available to the government and the ANU gives some clues.

The vice chancellor is right to not make attribution judgements. It’s not really the role of a university to take on a nation-state or to engage in law enforcement. That’s the role of government.

The lessons from the ANU experience are disturbing and stark. ANU had a range of ‘normal’ cybersecurity measures in place across its networks, but they were clearly insufficient. It was only because ANU had already started to lift its cybersecurity practices and investment by April that the attacker was discovered (the report notes this happened because of a ‘baseline threat hunting exercise’).

So, every public institution or company needs to examine its own practices. If it doesn’t go beyond standard protective security—firewalls, antivirus measures, intrusion detection software—it should consider whether it needs to take more active measures within and across its internal systems.

The ANU attack is also a reminder that systems and IT investment are not enough. Strong security awareness and practice by all the people in an organisation is essential to reducing the risk of cyber compromise.

And it shows what everyone knows intellectually to be true, but what a real-world example brings home in a much more palpable way: if you hold data—particularly personal data, but also data aggregations of almost any kind—it is valuable to someone. If you fail to protect it, your organisation may suffer financial loss through lost opportunity to use intellectual property, but, as importantly, if you compromise the personal data of your customers, your own people, or your partners, you will suffer reputational damage that is hard to repair.

The ANU incident report provides a menu of questions and actions for all of our universities and should be required reading for all vice chancellors. It is also a great prompt for any corporate board or CEO who wants to know more about what those cybersecurity folk in IT are and are not up to.

The last lesson from the ANU experience is one for government. Naming cyber attackers, particularly when they are state actors, is an essential part of deterrence and security. Naming and shaming may not prevent a motivated state actor from conducting further attacks, but it creates awareness of real, as opposed to hypothetical, threats. It also creates the opportunity for others to speak up and act collectively against the perpetrators. And only governments really have the horsepower and status—let alone responsibility—to bring the actions of other states to public account and attention.

It may not be possible to name the attacker in this case. But in instances where attribution is clear—as is almost certainly the case with the recent hacks into our parliament and major political parties—they should be named. Not doing so is like coming home to a burgled house, knowing who the burglar was, but still having them over to dinner that night and keeping silent about the mess around the table. That doesn’t fix the problem; it only provides a licence for further bad behaviour.

Let’s see this ANU report as the start of a healthy shift for all Australian institutions in publishing details of cyber incidents and their responses. Greater openness about such incidents will build a body of knowledge and good practice that will make us all safer in our online activities and more able to trust the institutions that hold our data and our knowledge.

In Australia, the federal, state and territory governments have defined critical infrastructure as: ‘Those physical facilities, supply chains, information technologies and communication networks which, if destroyed, degraded or rendered unavailable for an extended period, would significantly impact the social or economic wellbeing of the nation, or affect Australia’s ability to conduct national defence and ensure national security.’

Critical infrastructure is an area where the cyber and physical worlds are converging—the operation of digital systems affects our physical world, and so a cyber incident can have direct and serious physical impacts on property and people.

The security risks are far from just theoretical and have been known for many years. In 2001, a disgruntled ex-employee hacked the systems at a sewage treatment works run by the Maroochy Shire Council in Queensland, causing the release of raw sewage into freshwater systems. In recent years the Stuxnet attack on Iranian nuclear facilities and numerous attacks on communications networks in Ukraine, attributed to Russia, have shown that countries are active in this area. Few people would contest that ensuring the cyber safety of operational systems in our critical national infrastructure should be a key priority.

However, research carried out for my recent ASPI paper shows that more can and should be done. While the risk is recognised at a conceptual level, there is a strong feeling that it is not getting the attention it needs from senior decision-makers. Why is this, and what should we be doing about it?

First, we need to raise awareness of how securing ‘operational technology’ (OT) systems differs from securing IT. In recent years we have made great strides in understanding and mitigating information security risks, but these lessons often don’t translate well into the OT world. OT equipment has a long lifetime (10–20 years, compared with five years for typical IT equipment), making it difficult to keep it supported and up to date. Even when updates are available, it’s often not feasible to take systems offline to apply them. And the latest artificial intelligence solutions trained to detect IT attacks may completely miss a cyberattack on OT.

The next step is to encourage and empower boards to explicitly understand and define their cyber risk appetite. Critical infrastructure in Australia consists of a whole range of sizes and types of organisations—from small regional councils operating water and sewerage systems to large multinational companies. Varied approaches will be needed, including defining standards and getting the right corporate governance and regulation.

Organisations need to have a clear understanding of the consequences and potential risks of their actions. The risk calculus may be different where the potential impact is catastrophic failure of key infrastructure, and this may drive better decision-making on how digital transformation is applied and on how mitigation plans are put in place to enable successful change.

Finally, we need to ensure that the right resources and solutions are available to help mitigate risks. These should include programs to educate and develop the workforce, as well as better sharing of threat intelligence between government and industry and among businesses. In his address to the ASPI National Security Dinner earlier this year, former NSA chief Mike Rogers made a convincing call for a stronger real-time partnership between critical infrastructure players and the government.

We are at an inflection point in the convergence of physical and cyber systems—the people we spoke to for our research said there had been little change in the last two years, but they expect this convergence to accelerate in the next two years. The arrival of 5G and the internet of things are just two of the factors driving this.

The history of the internet shows that too often we have been playing catch-up and trying to apply security as an afterthought. In this field we have an opportunity to change that narrative. However, none of the suggestions above are quick fixes, so we need to prioritise resources and get moving quickly before technology overtakes us.

Earlier this year, American officials acknowledged that US offensive cyber operations had stopped Russian disruption of the 2018 congressional election. Such operations are rarely discussed, but this time there was commentary about a new offensive doctrine of ‘persistent engagement’ with potential adversaries. Will it work?

Proponents of ‘persistent engagement’ have sought to strengthen their case by arguing that deterrence does not work in cyberspace. But that sets up a false dichotomy. Properly used, a new offensive doctrine can reinforce deterrence, not replace it.

Deterrence means dissuading someone from doing something by making them believe that the costs to them will exceed their expected benefit. Understanding deterrence in cyberspace is often difficult, because our minds remain captured by an image of deterrence shaped by the Cold War: a threat of massive retaliation to a nuclear attack by nuclear means. But the analogy to nuclear deterrence is misleading, because, where nuclear weapons are concerned, the aim is total prevention. Deterrence in cyberspace is more like deterring crime: governments can only imperfectly prevent it.

There are four major mechanisms to reduce and prevent adverse behaviour in cyberspace: threat of punishment, denial by defence, entanglement and normative taboos. None of the four is perfect, but together they illustrate the range of means by which it is possible to minimise the likelihood of harmful acts. These approaches can complement one another in affecting actors’ perceptions of the costs and benefits of particular actions, despite the problem of attribution. In fact, while attribution is crucial for punishment, it is not important for deterrence by denial or entanglement.

Because deterrence rests on perceptions, its effectiveness depends on answers not just to the question of ‘how’ but also to the questions of ‘who’ and ‘what’. A threat of punishment—or defence, entanglement or norms—may deter some actors but not others. Ironically, deterring major states from acts like destroying the electricity grid may be easier than deterring actions that do not rise to that level.

Indeed, the threat of a ‘cyber Pearl Harbor’ has been exaggerated. Major state actors are more likely to be entangled in interdependent relationships than are many non-state actors. And American policymakers have made clear that deterrence is not limited to answering cyberattacks with cyber means (though that is possible). The United States will respond to cyberattacks across domains or sectors, with any weapons of its choice, proportional to the damage that has been done. That can range from naming and shaming to economic sanctions to kinetic strikes.

The US and other countries have asserted that the laws of armed conflict apply in cyberspace. Whether or not a cyber operation is treated as an armed attack depends on its consequences, not on the instruments used. And this is why it is more difficult to deter attacks that do not reach the equivalence of armed attack. Russia’s hybrid warfare in Ukraine and its disruption of the 2016 US presidential campaign, as the report by US Special Counsel Robert Mueller has shown, fell into such a grey area.

Although ambiguities of attribution for cyberattacks, and the diversity of adversaries in cyberspace, do not make deterrence and dissuasion impossible, they do mean that punishment must play a more limited role than in the case of nuclear weapons. Punishment is possible against both states and criminals, but its deterrent effect is slowed and blunted when an attacker cannot be readily identified.

Denial (through hygiene, defence and resilience) plays a larger role in deterring non-state actors than major states, whose intelligence services can mount advanced persistent threats. With time and effort, a major military or intelligence agency is likely to penetrate most defences, but the combination of threat of punishment and effective defence can influence their calculations of costs and benefits. This is where the new doctrine of ‘persistent engagement’ comes in. Its goal is not only to disrupt attacks, but also to reinforce deterrence by raising the costs for adversaries.

But policy analysts cannot limit themselves to the classic instruments of nuclear deterrence—punishment and denial—as they assess the possibility of deterrence and dissuasion in cyberspace. They should also pay attention to the mechanisms of entanglement and norms. Entanglement can alter the cost-benefit calculation of a major state such as China, but it probably has little effect on a state such as North Korea, which is weakly linked to the world economy.

‘Persistent engagement’ can, however, aid deterrence in such difficult cases. Of course, entering any adversary’s network and disrupting attacks poses a danger of escalation. Rather than relying just on tacit bargaining, as proponents of ‘persistent engagement’ often emphasise, more explicit communication may help.

Stability in cyberspace is difficult to predict, because technological innovation there is faster than in the nuclear realm. Over time, better attribution forensics may enhance the role of punishment, and encryption or machine learning may increase the role of denial and defence.

Cyber learning is also important. As states and organisations come to understand better the limitations and uncertainties of cyberattacks and the growing importance of the internet to their economic wellbeing, cost-benefit calculations of the utility of cyberwarfare may change. Not all cyberattacks are of equal importance; not all can be deterred; and not all rise to the level of significant threats to national security.

The lesson for policymakers is to focus on the most important attacks, recognise the full range of mechanisms at their disposal and understand the contexts in which attacks can be prevented. The key to deterrence in the cyber era is to acknowledge that one size does not fit all. ‘Persistent engagement’, when viewed from this perspective, is a useful addition to the arsenal.

Imagine this. You wake up in 2022 to discover that the Australian financial system is in crisis. Digital land titles have been irreversibly altered, and it’s impossible for people and companies to prove that they own their assets. The essential underpinning of Australia’s multitrillion-dollar housing market—ownership—is thrown into question. The stock market goes into freefall as confidence in the financial sector evaporates. Banks cease all property lending and stop any business lending that has property as collateral. The real estate market, insurance market and ancillary industries come to a halt. The economy begins to lurch.

At the same time, a judge’s clerk notices an error in an online reference version of an act of parliament. It quickly emerges that a foreign actor has cleverly tampered with the text, but it’s unclear what other parts of the legislation have been changed or whether other laws have been altered. The whole court system is shut down as the entire legal code is checked against hardcopy and other records and digital forensics continue.

Meanwhile, a ransomware attack has locked up the digital archives of Australia’s major media organisations and parallel archival institutions. Over 200 years of stories about the nation are suddenly inaccessible and potentially lost.

As the Australian public and media demand answers, the government is struggling to deal with the crisis. Paper copies of many key documents simply don’t exist.

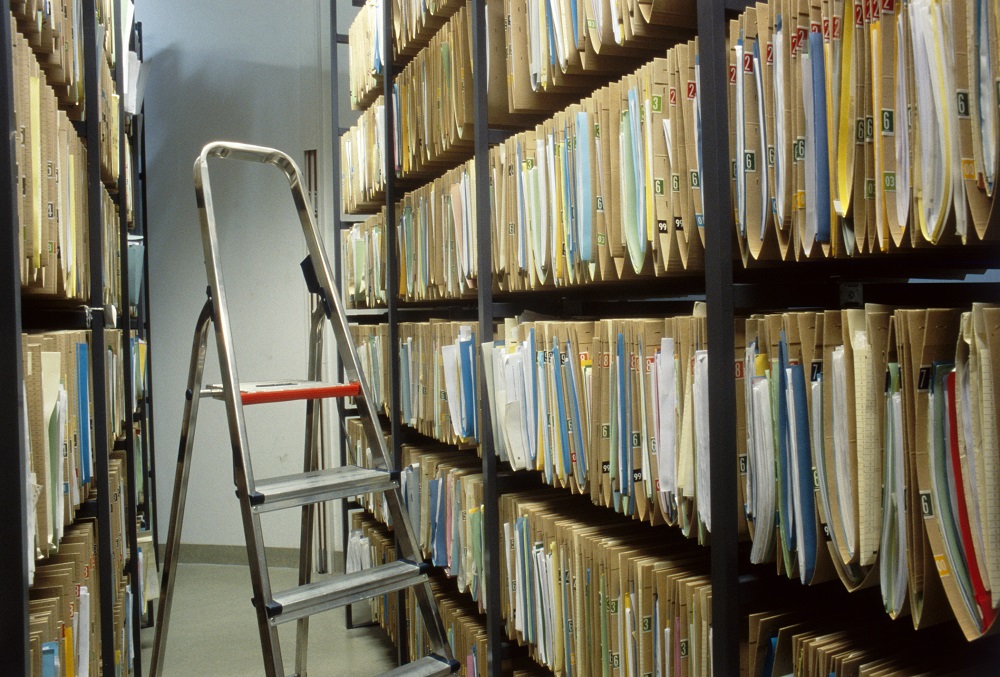

National identity assets are the evidence of who we are as a nation—from our electronic land titles and biometric immigration data, to the outcomes of our courts and electoral processes and the digital images, stories and national conversations we’re having right now.

Increasingly, our national footprint and interactions are digital only, including both digitally born and digitised material. The electronic repository is quickly becoming the primary source of truth: the legal and historical evidence we rely on now and into the future.

Keeping national identity assets safe and accessible is vital not only for chronicling Australia’s past, but for supporting government transparency and accountability, the rights and entitlements of all Australians, and our engagement with the rest of the world.

In my report for ASPI’s International Cyber Policy Centre, Identity of a nation: protecting the digital evidence of who we are, released today, I argue that our national identity assets are a prime and obvious target for adversaries looking to destabilise and corrode public trust in Australia.

According to the Australian Cyber Security Centre, 47,000 cyber incidents occurred in Australia in 2016–17, a 15% increase on the previous year. Both state and non-state adversaries have the capability to disrupt, distort and expropriate national identity data. So far, they haven’t targeted our national identity assets, but that could change at any time.

Many national data assets are held electronically by government agencies, archives, records agencies, libraries and other recordkeeping institutions. Because we haven’t anticipated sophisticated cyberattacks against the organisations holding these assets, and because the data is generally undervalued, the protections in place are inadequate.

In addition to the risk of data loss, there’s also a risk of a loss of trust in the organisations and governments that hold national identity assets.

National and state government archives and other record-holding institutions play the role of ‘impartial witnesses’, identifying and storing this information and holding the government to account under the rule of law and in the ‘court’ of history. We need to trust that these impartial witnesses can identify, keep and preserve this evidence.

If we aren’t vigilant, we run the risk that adversaries could destroy or manipulate our national identity assets, compromising the digital pillars of our society and culture.

States such as Russia have demonstrated their intention to disrupt and undermine Western democracies, and obvious future targets for such attacks are national identity assets that are poorly protected and offer high-impact results if disrupted, corrupted or destroyed.

With more than 30 countries known to possess offensive cyber capabilities, and cyber capabilities being in reach of non-state actors from individuals to cybercrime organisations, the number of potential adversaries able to target our national identity assets is significant and increasing.

The Australian government’s Critical Infrastructure Centre and Australian Cyber Security Centre should address gaps in our national infrastructure and information security by including national identity assets in our critical infrastructure framework and closely aligning digital preservation and information security.

We also need to develop ways of better identifying and valuing national identity digital assets and to give more attention to information governance. Australian governments—state and federal—need to ensure that our critical government-held national identity assets are protected and institutions charged with their care are adequately funded to do so.

Until these issues are addressed, the increasing vulnerability, invisibility and potential loss of the digital evidence of who we are as a nation remains a sleeping, but urgent, national security issue.

Australia’s silence on the attribution of cyberattacks and intellectual property theft emanating from China is representative of a broader silence on irritants and fundamental differences in the bilateral relationship. This silence does not serve Australia’s national interest. Worse, it doesn’t actually work.

Australian industry seeks a stable relationship that facilitates the free flow of goods and services. Universities want stronger and deeper collaboration as China and its institutions prioritise cutting-edge science and technology. In a perfect world, these groups would benefit from a relationship that leveraged complementary skills and expertise and was free of quarrels, tensions and the winds of global politics. This would be—to borrow Chinese Communist Party talking points—a ‘win–win’ for Australia’s economy and our research sector.

But such a relationship is difficult to achieve at a time when, as our economic and research interests converge, the gap between Australian and Chinese societal values, strategic interests and commitment to a rules-based international order continues to widen. And unfortunately, the differences are only becoming starker as Beijing uses its growing power.

Despite our own obvious political challenges, the Australian government exists to empower individuals and is strengthened by being transparent, accountable and committed to values like multiculturalism and a free and inquisitive media. These values aren’t shared by all of our international partners, the CCP included, but they must remain non-negotiable.

There is a lot at stake in the China–Australia relationship and, as we attempt to balance these economic and security interests, there is an element of schizophrenia to our attempts to ‘get the China relationship right’. It’s a deeply important relationship but it’s also incredibly complicated and it’s only going to get more so. There is no right path forward that will please everyone.

But there is a wrong path, and we are in danger of taking it.

In what look like anxious efforts to ‘manage’ all aspects of the China relationship and deny the existence of key differences, what has become starkly apparent is what’s not being said, even in an environment where the government has made some tough and important decisions, such as excluding ‘high-risk vendors’ from the 5G network and passing legislation on foreign interference.

In fact, listening to the thunderous silence that now so often accompanies international policy developments has become as important as taking note of what is said. Over the years, a new international and security policy norm has emerged.

To maintain what we believe are good bilateral relations and in an effort to prevent strident objections from the CCP, its state-owned media and occasionally our own companies, we say less—with very few exceptions—even though there is more to say than ever before.

In a bipartisan phenomenon, we have examined our relationship with China and decided, in contrast to all others, to walk on eggshells and handle it with kid gloves. In so doing, we allow the Chinese state to set new precedents without being challenged—on cyberattacks and economic espionage, on political interference and coercion, and on human rights (notwithstanding Foreign Minister Marise Payne’s recent comments on human rights abuses in Xinjiang).

Left unaddressed, these emerging norms and precedents will take us further and further away from Australia’s values and interests and contribute to a further degradation of the international rules-based order that has so beautifully and conveniently ensured Australia’s security and prosperity to date.

This silence can be clearly seen in the Australian government’s (and other Western governments’) consistent failure to publicly attribute malicious cyber behaviour targeting Australian citizens, businesses and government bodies to the Chinese state—while being comfortable calling out examples of Russian cyber intrusion occurring far from our shores.

In early 2017 Australia and China agreed ‘not to conduct or support cyber-enabled theft of intellectual property, trade secrets or confidential business information with the intent of obtaining competitive advantage’. While this may have led to a short-term improvement in behaviour, in June this year we learned from media reporting that China-based hackers had ‘utterly compromised’ the Australian National University’s computer network. One intelligence official told the Nine Network’s Chris Uhlmann that ‘China probably knows more about the ANU’s computer system than it does.’

Last week, Fairfax Media reported that China’s Ministry of State Security had ‘directed a surge in cyber attacks on Australian companies over the past year’. Soon after, and once again through the media, we learned of reports of alleged targeted data theft enabled by state-owned enterprise China Telecom, which is reported to have diverted internet traffic destined for Australia via mainland China, logging this diversion as a ‘routing error’.

In contrast to our silence on Chinese cyber behaviour, over the past year and through a string of different ministers and departments, the Australian government publicly attributed malicious cyber behaviour to Russia, Iran and North Korea. Last month, Australia even attributed the 2015 hacking of a small UK TV station’s email accounts to the Russian military. That’s strange, given that we’re yet to name the offender(s) behind many costly attacks against Australian targets.

Of course, the naming-and-shaming approach doesn’t always work: it’s unlikely to dissuade the ‘hermit kingdom’ of North Korea, for example. But there are other approaches we should also be exploring to better deter such behaviour. The US Department of Justice has attempted to do so by increasingly using indictments and charging Chinese, Russian and Iranian military or state-supported hackers believed to have crossed from intelligence gathering into criminal activity.

Avoiding talking about the complicated aspects of our relationship with the Chinese state is deeply problematic for a range of reasons. First, it doesn’t work. There is no proof that adherence to the CCP’s long-standing demand that criticism and tensions be raised only behind closed doors has actually resulted in any long-term changes in behaviour. Not only is this approach in stark contrast to China’s own actions, but when it comes to malicious behaviour in cyberspace, for example, research from ASPI’s International Cyber Policy Centre and reporting from Fairfax show us that there’s actually proof to the contrary.

Second, treating the bilateral relationship with China differently has clouded strategic and policy decision-making. The process has become characterised by self-censorship and by groupthink reflecting a belief that acting in Australia’s interests will cause unnecessary damage to the broader diplomatic relationship. In fact, we should probably take comfort in China’s pragmatism. Despite a degree of hysteria before key policy announcements, the main reaction we have seen to recent decisions to implement foreign interference laws and reject Chinese investment in critical infrastructure is one of business as usual.

Third, treating China differently fosters an environment characterised by pre-emptive decision-making and, again, self-censorship. The narrative that ‘there is a broader diplomatic relationship to consider here’ leaves little space for a contest of policy ideas in a relationship that needs and deserves a constant injection of creative thinking.

Finally, and most importantly, public attribution and indictments are far more than diplomatic tools. The real issue is that in trying to protect the relationship with Beijing, the government is not being open with the Australian public, who have the right to be informed about new and emerging risks to their businesses, intellectual property and online safety. Regardless of where these threats emanate from, they must be discussed, understood and dealt with. Not doing so is doing a disservice to the public and sends the wrong message to industry and civil society, which are also navigating their own China relationships.

Why, for example, should universities be more open and forthright about their experiences with Chinese hacking in the absence of federal government leadership? We need our universities and businesses to be open about all of the serious cybersecurity threats they face, rather than, as Cyber Security Cooperative Research Centre CEO Rachael Falk has written, continue with the common narrative that they didn’t know about a cyber breach or that no data was stolen.

But the government can only hold others to a standard that it is also prepared to sign up to, and it must lead by example. Some government departments are becoming more active in raising such threats with industry at senior levels. This is a welcome step but should be expanded.

To borrow the Australian bureaucracy’s own talking points, the government should take a ‘country agnostic’ approach to cyber attribution and the indictment of hackers. Tough decisions made on foreign interference or critical infrastructure should be publicly explained more often and more clearly. Leading by example starts at the top and must involve both politicians (who come and go) and the senior bureaucracy (which is often the only constant in our relationship with China).

It’s time to stop avoiding, and instead start normalising, talking about the problems in Australia’s increasingly vital but increasingly complicated relationship with China.