Social Credit

Technology-enhanced authoritarian control with global consequences

What’s the problem?

China’s ‘social credit system’ (SCS)—the use of big-data collection and analysis to monitor, shape and rate behaviour via economic and social processes1—doesn’t stop at China’s borders. Social credit regulations are already being used to force businesses to change their language to accommodate the political demands of the Chinese Communist Party (CCP). Analysis of the system is often focused on a ‘credit record’ or a domestic ranking system for individuals; however, the system is much more complicated and expansive than that. It’s part of a complex system of control—being augmented with technology—that’s embedded in the People’s Republic of China’s (PRC’s) strategy of social management and economic development.2 It will affect international businesses and overseas Chinese communities and has the potential to interfere directly in the sovereignty of other nations. Evidence of this reach was seen recently when the Chinese Civil Aviation Administration accused international airlines of ‘serious dishonesty’ for allegedly violating Chinese laws when they listed Taiwan, Hong Kong and Macau on their international websites.3 The Civil Aviation Industry Credit Management Measures (Trial Measures) that the airlines are accused of violating were written to implement two key policies on establishing the SCS.4

As businesses continue to comply, the acceptance of the CCP’s claims will eventually become an automatic decision and hence a norm that interferes with the sovereignty of other nations. For members of the public on the receiving end of such changes, the CCP’s narrative becomes the dominant ‘truth’ and alternative views and evidence are marginalised. This narrative control affects individuals in China, Chinese and international businesses, other states and their citizens.

What’s the solution?

Democratic governments must become more proactive in countering the CCP’s extension of social credit. This includes planning ahead and moving beyond reactive reciprocal responses. Democratic governments can’t force firms to refuse to comply with Beijing’s demands, but they also shouldn’t leave businesses alone to mitigate risks that are created by the Chinese state’s actions. Democratic governments should identify the potential uses of certain technologies with application to the Chinese state’s SCS that could have serious human rights or international security implications. Export controls that prevent supplying or cooperating to develop such technologies for the Chinese state would buy time, but this is only a short-term and partial solution. Where social credit extends beyond China’s borders, the penetration is often successful through the exploitation of existing weaknesses and loopholes in democratic countries. A large part of the solution for addressing these easily exploitable weaknesses is through strengthening our own democracies. Issues such as data protection, investment screening and civil liberties protection are most pressing. Transparency, while not a solution, will help to identify breaches and to prosecute abuses where necessary. Steps must be taken to shield overseas Chinese communities from the kinds of CCP encroachment that will only proliferate with a functioning and tech-enabled SCS.

China’s social credit system

China’s SCS augments the CCP’s existing political control methods. It requires big-data collection and analysis to monitor, shape and rate behaviour. It provides consequences for behaviour by companies and individuals who don’t comply with the expectations of the Chinese party-state. At its core, the system is a tool to control individuals’, companies’ and other entities’ behaviour to conform with the policies, directions and will of the CCP. It combines big-data analytic techniques with pervasive data collection to achieve that purpose.

Social credit supports the CCP’s everyday economic development and social management processes and ideally contributes to problem solving. That doesn’t make social credit less political, less of a security issue or less challenging to civil liberties. Instead, it means that the threats that this new system creates are masked through ambiguity. For the system to function, it must provide punishments for acting outside set behavioural boundaries and benefits to incentivise people and entities to voluntarily conform, or at least make participation the only rational choice.

Social credit and the technology behind it help the Chinese party-state to:

- control discourse that promotes the party-state leadership’s version of the truth, both inside and outside China’s geographical borders

- integrate information from market and government sources, optimising the party-state’s capacity to pre-empt and solve problems, including preventing emerging threats to the CCP’s control

- improve situational awareness with real-time data collection, both inside and outside China’s geographical borders, to inform decision-making

- use solutions to social and economic development problems to simultaneously augment political control.

Source: Created by Samantha Hoffman, June 2018.

Extending control outside the PRC’s borders

For decades, the CCP has reached beyond its borders to control political opponents. Tactics are not changing under Xi Jinping, but techniques and technology are. For example, in several liberal democracies, Chinese officials have harassed ‘Xi Jinping is not my president’ activists and their families after messages were posted to WeChat.5 Research for this report also found other examples of harassment, including attempts by Chinese officials to coerce overseas Chinese citizens to install surveillance devices in their businesses.6 More commonly, the CCP doesn’t exert control overseas with direct coercion. Instead, it uses ‘cooperative’ versions of control.

For example, a function of Chinese student and scholar associations, which are typically tied to the CCP,7 is to offer services such as airport pick-up.8 Beyond providing necessary services, these techniques reinforce the simple message that the CCP is everywhere (and so are its rules). Social credit embeds such existing processes in a new toolkit for regulatory and legal enforcement.

On 25 April 2018, the Chinese Civil Aviation Administration accused United Airlines, Qantas and dozens of other international airlines of ‘serious dishonesty’ for allegedly violating Chinese laws in how they listed Taiwan, Hong Kong and Macau on their websites.9 To clarify: those websites, which belong to international companies, are for global clients. The Chinese authorities said failure to classify the places as Chinese property would count against the airlines’ credit records and would lead to penalties under other laws, such as the Cybersecurity Law.

The Planning Outline for the Construction of a Social Credit System (2014–2020) (the Social Credit Plan) specifically identified ‘improving the country’s soft power and international influence’ and ‘establishing an objective, fair, reasonable and balanced international credit rating system’ as goals.10

The goals aren’t credit ratings like those done by Standard & Poor’s or Moody’s, but are instead about ensuring state security. State security here, though, is not the simple protection of domestic and foreign security.11 It’s also about protecting the CCP and securing the ideological space both inside and outside the party. That task transcends geographical borders.

The Civil Aviation Industry Credit Management Measures that the airlines are accused of violating were written to implement two key policy guidelines on establishing China’s SCS. The measures are among many other implementing regulations of the Social Credit Plan. Social credit was used specifically in these cases to compel international airlines to acknowledge and adopt the CCP’s version of the truth, and so repress alternative perspectives on Taiwan. Shaping and influencing decision-making is a pre-emptive tactic for ensuring state security and party control. The CCP deals with threats by ‘combining treatment with prevention, but primarily focusing on prevention.’12 That doesn’t make the outcome less coercive.

Social credit records (for individuals and entities) are the outcome of data integration. Technical capacity for data collection and management, therefore, is the key to realising the envisioned SCS.13 Data integration and management don’t simply aid the process of putting individuals or entities on lists. They also support decision-making—some of which ideally will be done automatically through algorithms—and enhance the CCP’s awareness of the PRC’s internal and external environments. The key to understanding this aspect of social credit is the first line of the Social Credit Plan. The document says that social credit supports ‘China’s economic system and social governance system’.14 Social credit is about problem-solving but it’s also designed to thrive on its own contradictions, just like the social governance process (hereafter ‘social management’) that it supports.15 Social management isn’t simply the management of civil unrest. Social management as a concept requires the provision of services and the use of normal economic and social management to exert political control. Yet therein lies the contradiction: the Chinese state does not prioritise solving problems above political security. In fact, problem solving is simultaneously directed at political security. The system will also increasingly rely on technology embedded in everyday life to manage social and economic development problems while simultaneously using the same resources to expand control. Understanding this dual-use nature of the SCS is the key: the system’s ability to solve and manage problems does not diminish its political or coercive capacity.

Credit records are global and political

A January 2018 article published by the Overseas Chinese Affairs Office of the State Council for the attention of ‘overseas Chinese and ethnic Chinese’ (华侨华人) warned that the Civil Aviation Industry Credit Management Measures also applied to them.16 Violations would lead to greylisting and blacklisting and would be included in individuals’ and organisations’ overall credit records, it said. Importantly, ‘overseas Chinese and ethnic Chinese’ can cover anyone who the CCP claims is ‘Chinese’, whether or not they have PRC citizenship. In addition to expatriates, it can include someone who was never a PRC citizen, such as citizens of Taiwan.17 A PRC-born person with citizenship in another country is also considered subject to the rules.18

Political uses for social credit’s implementing regulations might seem disconnected from the idea that credit records should create trust and encourage moral behaviour, but they are not. ‘Trust’ and ‘morality’ have dual meanings in the context of social credit. One side is focused on the reliability of an individual or entity, and the other on making the CCP’s position in power reliably secure. Trust and morality serve their purpose only if they’re created on the party’s terms and if they produce reliability in the CCP’s capacity to govern. So the language itself promotes the party’s authority and control.

The market and legal data that make up a person’s or entity’s credit record are intrinsically political, while input sources can be simultaneously political and non-political.19 For instance, Article 8, Section 3 of the Civil Aviation Industry Credit Management Measures sanctions individuals and entities for ‘a terrorist event’ or a ‘serious illegal disturbance’. Such disturbances could include safety incidents, such as a passenger opening an emergency exit door in a non-emergency.20 They could also include false terrorism charges against those considered political opponents, such as Uygurs (the CCP already uses false-charge tactics against individuals and NGOs).21 This year’s civil aviation cases are not an irregularity. Similar demands on companies have accumulated since January 2018. For instance, the Shanghai Administration for Industry and Commerce fined Japanese retailer Muji’s Shanghai branch 200,000 yuan (A$41,381) over packaging that listed Taiwan as a country.22 The fine cited a violation of Article 9, Section 4 of the PRC advertising law, which sanctions any activity ‘damaging the dignity or interests of the state or divulging any state secret’. The violation was then recorded on the National Enterprise Credit Information Publicity System.

The timing of these cases coincides with a regulation that took effect on 1 January 2018, under which every company with a business licence in China was required to have an 18-digit ‘unified social credit code’. Every company without a business licence designating its code was required to update its licence.23 Euphemistically, the code is to ‘improve administrative efficiency’.24 ‘Efficiency’ includes the meaning that any sanction against a company filed on the company’s credit record could trigger sanctions under other relevant legislation. Similar cases may multiply after 30 June 2018 because unified social credit codes will also be required for government-backed public institutions, social organisations, foundations, private non-enterprise units, grassroots self-governing mass organisations and trade unions.25
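As an illustration of what an 18-character ‘unified social credit code’ looks like as a data item, the sketch below checks whether a string is well formed. It rests on an assumption that this report does not itself state: that the code follows the check-character scheme of the Chinese national standard GB 32100-2015 (a 31-symbol alphabet, weights equal to powers of 3 modulo 31, and a final check character). The function names and the sample body are hypothetical, and the sketch only tests internal consistency; it says nothing about whether a code is actually registered or what is filed against it.

```python
import re

# Alphabet and weights as understood from GB 32100-2015 (an assumption;
# the report does not describe the code's internal structure).
ALPHABET = "0123456789ABCDEFGHJKLMNPQRTUWXY"  # 31 symbols; I, O, S, V, Z unused
WEIGHTS = [pow(3, i, 31) for i in range(17)]   # 1, 3, 9, 27, 19, ...

_BODY_RE = re.compile(f"[{ALPHABET}]{{17}}")


def check_char(body: str) -> str:
    """Return the 18th (check) character for a 17-character body."""
    if not _BODY_RE.fullmatch(body):
        raise ValueError("body must be 17 characters from the permitted alphabet")
    total = sum(ALPHABET.index(c) * w for c, w in zip(body, WEIGHTS))
    return ALPHABET[(31 - total % 31) % 31]


def is_well_formed(code: str) -> bool:
    """True if code has 18 permitted characters and a matching check character."""
    code = code.strip().upper()
    if len(code) != 18 or not _BODY_RE.fullmatch(code[:17]):
        return False
    return code[17] == check_char(code[:17])


if __name__ == "__main__":
    body = "9111000012345678A"          # hypothetical 17-character body
    code = body + check_char(body)      # append the computed check character
    print(code, is_well_formed(code))
```

A single identifier of this kind is what lets records held by different agencies be joined on one key, which is the practical basis for a sanction in one register triggering consequences in another.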

Generating ‘discourse power’ through data

An overlooked purpose of the SCS is to strengthen the PRC’s ‘discourse power’ or ‘right to speak’ (话语权).26 This can also be understood as the idea of creating the CCP’s narrative control. Discourse power is ‘an extension of soft power, relating to the influence and attractiveness of a country’s ideology and value system’.27 Discourse power allows a nation to shape and control its internal and external environments.

In the hands of political opponents, discourse power is a potential threat. According to the CCP, ‘hostile forces’ can incite and exploit economic and social disorder in other countries.28 This threat has been tied directly to leading international credit agencies—Moody’s Investors Service, Standard & Poor’s and Fitch Ratings—seen as potential threats to China. One article claimed that the agencies can ‘destroy a nation by downgrading their credit score, utilising the shock power of “economic nukes”’.29 Another article tied the problem to the One Belt, One Road scheme (Belt and Road Initiative, BRI), because participant countries accept the current international ratings system. For the CCP, the solution is to increase the ‘discourse power [that China’s] credit agencies possess on the international credit evaluation stage’.30 China’s SCS provides an alternative to the existing international credit ratings system. It does some similar things to the existing system, but is designed to give the Chinese state a more powerful voice in global governance. As we saw in the international airlines case, this louder voice is being used to exert influence on the operations of foreign companies.

Preventing the sort of credit crisis described above requires the CCP to have control over the narrative to prevent a political opponent from taking over the narrative—in other words, it requires the CCP to strengthen its ‘discourse power’. Discourse power is directly embedded in the trust and morality that social credit is supposed to create in Chinese society, and not only because trust and morality help with everyday social and economic problem solving. Trust and morality, in the way the Chinese state uses the terms, include as a core concept support for and adherence to CCP control and directions. This linkage can be traced at least as far back as an early 1980s propaganda effort related to ‘spiritual culture’, which responded to ‘popular disillusionment with the CCP’ and the promotion of Western politics as ‘superior’ to China’s.31

The concern only increased as China’s present-day perception of threat was shaped by events such as Tiananmen in 1989, Kosovo in 1999, China’s entry into the World Trade Organization, and the ‘colour revolutions’ of the early 2000s. For instance, one article said that, despite mostly positive benefits from China entering the World Trade Organization, ‘Western civilisation-centred ideology, and aggressive Western culture can erode and threaten the independence and diversity of [China’s] national culture through excessive cultural exchanges.’32

One reason social credit contributes to strengthening the CCP’s discourse power is that the system relies on the collection and integration of data to improve the party’s awareness of its internal and external environments. In 2010, Lu Wei described in great detail the meaning of ‘discourse power’ as referring not only to the ‘right to speak’, but also to guaranteeing the ‘effectiveness and power of speech’.33 He elaborated that for China to have discourse power requires both collection power and communication power. Collection power is the ability to ‘collect information from all areas in the world in real time’. Communication power, which ‘decides influence’, becomes stronger with more timely collection.

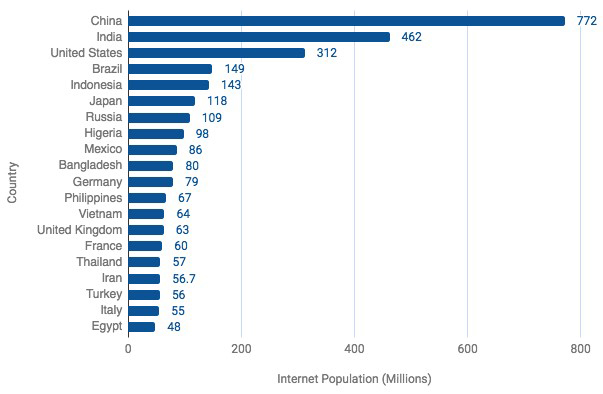

Data collection supporting China’s environmental awareness doesn’t stop at the country’s borders. Social credit requires real-time monitoring through big-data tools that can inform decision-making and the implementation of the credit system. In 2015, Contemporary World, a magazine affiliated with the International Liaison Department, published an article focused on big-data collection associated with the BRI.34 It said that data could be used to inform diplomatic and economic decision-making, as well as emergency mobilisation capacity. ‘Data courier stations’ within foreign countries would send data via back-ends to a centralised analysis centre in China. Data collection would come from legal information mining, such as information on the internet and database purchases, and from market operations. The data courier stations would include ‘e-commerce (platforms), Confucius Institutes, telecoms, transportation companies, chain hotels, financial payment institutions and logistics companies’.35

The collection method and use of data would differ according to the source. The most obvious and practical reason for data collection at Confucius Institutes is to support teaching. Eventually, the same data would inform decisions on cultural exchange (ostensibly using Confucius Institute databases).36 The objective of ‘cultural exchange’ isn’t merely soft power creation. As ‘discourse power’ suggests, the CCP views ‘language’ as a ‘non-traditional’ state security issue and a means of influencing other states, businesses, institutions and individuals. One publication on the BRI linked to the propaganda department explained that ethnic minorities in China ‘use similar languages to others outside of our borders and are frequently subjected to hostile forces outside of the border’. To reduce the ‘security risk’, ‘resource banks’ or ‘language talent’ projects would support the automatic translation of both Chinese and non-common ‘strategic languages’.37 Automatic translation would help to ‘detect instability in a timely manner, [assist] rapid response to emergencies, and exert irreplaceable intelligence values over the course of prevention, early warning and resolution of non-traditional security threats, in order to ensure national security and stability’.38

According to the Ministry of Education, automatic translation would be implemented through technologies such as big data, cloud computing, artificial intelligence and mobile internet.39 This kind of technology already supports online teaching platforms affiliated with Confucius Institutes. They are at least partly reliant on technology from Chinese firm iFlytek. In addition to language learning software, iFlytek develops advanced surveillance for ‘public security’ and ‘national defence’, including voice recognition and keyword identification.40 Data collection and integration serve the purpose of increasing real-time situational awareness and simultaneously support the SCS’s discourse power objectives.

Technology, social management and economic development

The CCP saw crises such as the colour revolutions in Central Asia and Europe as illustrations of potential risks to its own power in China. Increasing the party’s discourse power has been justified as one response. The CCP’s perception of its exposure to risk increased with events such as the milk powder scandal in 2008 and the SARS outbreak between 2002 and 2003.41 Each crisis revealed significant problems with the PRC’s crisis prevention and response capacity due to a combination of political, logistical and technical faults.42 The SCS is part of an attempt to address those faults and to prevent the party’s competence or legitimacy from being questioned.

An innocuous line in the Social Credit Plan called for ‘the gradual establishment of a national commodity circulation (supply chain) traceability system based on barcodes and other products’.43 Barcodes are commonly used in supply-chain management to improve product traceability. ‘Other products’ include radio-frequency identification (RFID), which is also used for supply-chain management. RFID is an electronic tagging technology, readable through sensors or satellites, that ‘would gradually replace barcodes in the era of the internet of things’.

Most narrowly and directly, ‘barcodes and other products’ will help to manage food safety and health risks. The integration of information, supported by technology, facilitates risk identification. As technology’s ability to effectively identify risks improves, the government would be able to improve the regulation of behaviours that heighten ‘risk’, as defined and perceived by the CCP. As a result, potentially destabilising crises can be prevented through the optimisation of everyday governance tasks.

In future, the technologies used for supply-chain management will form an integral part of China’s development of ‘smart cities’. Smart cities in China harness ‘internet of things’ technology in support of resource optimisation and service allocation for both economic development and social management. A plan for standardising smart cities in China said that data mining using chips, sensors, RFID and cameras contributes to processes such as ‘identification, information gathering, surveillance and control’ of infrastructure, the environment, buildings and security within a city.44 Data mining covers such areas as ‘automatic analysis, classification, summarization, discovery and description of data trends’, and can be applied to decision-making about a city’s ‘construction, development and management’.45

All of these things contribute to building the capacity to make decisions and prevent threats from emerging by early intervention. Social credit will require big-data integration and data recording through information systems. Real-time decision-making capabilities are central to the success of the monitoring and assessment systems discussed in the Social Credit Plan, particularly in areas such as traffic management and e-commerce. Decision-making is enabled through ‘decision support systems’, which provide support for complex decision-making and problem solving.46 In China, present-day research emerges from a field called ‘soft science’ (软科学) that developed in the 1980s.47

Soft science is defined in China as a ‘system of scientific knowledge sustaining democratic and scientific decision-making’ that can be used in China to ‘ensure the correctness of our decision-making and the efficacy of our execution.’48 Correctness has as much a political meaning as its more usual one.

The use of decision support systems directly contributes to mechanisms for crisis prevention and response planning. Technologies such as barcodes and RFID are found in the logistical mobilisation strategies of many countries, not just the PRC. In China, however, civilian resources are multi-use, with simultaneous economic and social development and political control functions. The same systems support mobilisations for crises. At a study session on a speech that Xi Jinping gave at the 13th National People’s Congress, delegates from the People’s Liberation Army and People’s Armed Police learned about ‘infrastructure construction and resource sharing’. Efforts to improve those areas would support a ‘coordinated development of social services and military logistics’, while utilising various strategic resources and strength in areas such as politics, the economy, the military, diplomacy and culture.49

This integration of technology with social management, political control and economic development brings back into focus the concept of discourse power. Like the other aspects of social credit, those systems don’t stop at China’s borders. As part of the BRI, China plans to leverage smart cities, and technologies such as 5G, to ‘create an information superhighway’.50 Combined with channels for information collected from projects ranging from logistics to e-commerce or Confucius Institutes, information can be integrated to support social credit objectives such as increased discourse power.

Future challenges and recommendations

Exactly how social credit will develop is not yet known, because the system is a multi-stage, multi-decade project. To deal with its international consequences, foreign governments must act now while also committing to long-term strategic thinking about the international elements of the system. Although China’s development of the SCS can’t be stopped, its progress can be delayed and the system’s coercive aspects reduced while better solutions for dealing with the problem are found.

Recommendation 1: Control the export of Western technologies and research already used in—and potentially useful to—the Chinese state’s SCS.

Recommendation 2: Review emerging and strategic technologies, paying particular attention to university and research institute partnerships.

Controlling the export of Western technology is a key short-term solution. Governments should review strategic and emerging technologies that are already or could be used in the SCS. Universities and research organisations partnering with Chinese counterparts and contributing to the development or implementation of the CCP’s SCS should be included in this review. Universities can’t be blind to the impact and end uses of research that they conduct or contribute to with overseas partners. Besides the clear political and social control purposes, contributing to such a system also doesn’t align well with the ethical framework for most Western universities’ research; nor is it good for their global reputations. The findings of such reviews should help Western governments determine where to control access and what legislation is therefore appropriate.

Obvious starting points would be preventing situations such as the University of Technology Sydney’s Global Big Data Technologies Centre accepting $20 million from the state-owned defence enterprise China Electronics Technology Group Corporation (CETC).51 CETC is one of the key state-owned enterprises behind China’s increasingly sophisticated video surveillance apparatus, including facial recognition systems and scanners. One of the university’s most recent CETC-funded projects, from 2018, is in fact research on a ‘public security online video retrieval system’.52 Another example that highlights policy gaps is the recently reported case in which surveillance technology developed by Duke University and originally intended for the US Navy was sold into China with ‘clearance from the US State Department’ because the technology failed to secure backing in the US.53

Recommendation 3: Strengthen democratic resilience to counter foreign interference.

At least part of the solution requires acknowledgement that the spread of social credit beyond China’s borders takes advantage of easily exploitable weaknesses. The problems are compounded when a government opposed to liberal democratic values and institutions exploits those weaknesses. Australia’s foreign interference law could provide a framework for other countries looking to deal with the problem via legislation, as increased transparency is a foundation for an informed response.

Recommendation 4: Fund research to identify dual-purpose technologies and data collection systems.

While it isn’t a complete solution, funding research that contributes to greater transparency and public debate about China’s SCS is very important. Understanding what the Chinese state is doing, and what the implications are for other countries, requires asking the right questions. The problem is not just technology per se, but the ways in which processes and information are used to feed into and support the SCS, as well as other technology-enabled methods of control.

Recommendation 5: Governments and entities must strengthen data protection.

A crucial step is to limit the way data can be exported, used and stored overseas. Auditing should be conducted to ensure that any breaches are detected and to identify loopholes. For example, in the case of Confucius Institutes mentioned above, any data collected for any purpose should be stored using university-owned hardware and software, and only in university-operated databases. In the case of any violations, the university’s obligations to protect privacy and personal data on individuals that it holds should be enforced.

Recommendation 6: New legislation should reflect that this is also a human rights issue.

China’s SCS is not only an issue of political influence and control internationally. It’s also a human rights issue, and new legislation should reflect that. Through contributions to smart cities development in China, for example, Western companies are providing support to build a system that has multiple uses, including uses that are responsible for serious human rights violations. The US’s Global Magnitsky Act is an example of the type of legislation that could be used to hold companies and entities accountable for—willingly or not—enabling the Chinese party-state’s human rights violations.

Recommendation 7: Support companies threatened by China’s social credit system.

Western governments need to more actively and publicly support the private sector in mitigating risks that are created by the SCS. This should include collective counter-measures that impose costs for coercive acts.

Recommendation 8: Overseas Chinese communities must be protected from social credit’s overseas expansion.

Western governments must take steps to protect overseas Chinese from the kinds of CCP encroachment that have taken place for decades but that are now increasingly augmented through a functioning and tech-enabled SCS. Democratic governments must ensure that they legislate against the implementation and use of China’s SCS across and within their borders.

Acknowledgements

The author would like to thank Danielle Cave, Didi Kirsten Tatlow, Dimon Liu, Gregory Walton, Kitsch Liao, Nigel Inkster, Peter Mattis, Fergus Ryan and Rogier Creemers, as well as the Mercator Institute for China Studies. Disclaimer: All views and opinions expressed in this article are the author’s own, and do not necessarily reflect the position of any institution with which she is affiliated.

Important disclaimer

This publication is designed to provide accurate and authoritative information in relation to the subject matter covered. It is provided with the understanding that the publisher is not engaged in rendering any form of professional or other advice or services. No person should rely on the contents of this publication without first obtaining advice from a qualified professional person.

© The Australian Strategic Policy Institute Limited 2018

This publication is subject to copyright. Except as permitted under the Copyright Act 1968, no part of it may in any form or by any means (electronic, mechanical, microcopying, photocopying, recording or otherwise) be reproduced, stored in a retrieval system or transmitted without prior written permission. Enquiries should be addressed to the publishers.

- Samantha Hoffman, ‘Managing the state: social credit, surveillance and the CCP’s plan for China’, China Brief, Jamestown Foundation, 17 August 2017, 17(11), online. ↩︎

- Concepts summarised in this paper, including on social management, pre-emptive control, social credit and the ‘spiritual civilisation’, crisis response and threat perceptions, are drawn from my PhD thesis: Samantha Hoffman, ‘Programming China: the Communist Party’s autonomic approach to managing state security’, University of Nottingham, 29 September 2017. ↩︎

- China Civil Aviation Administration General Division, ‘关于限期对官方网站整改的通知’ (‘Notice Relating to Rectification of the Official Website within a Specified Timeframe’), 25 April 2018; James Palmer, Bethany Allen-Ebrahimian, ‘China threatens US airlines over Taiwan references’, Foreign Policy, 27 April 2018, online; Josh Rogin, ‘White House calls China’s threats to airlines “Orwellian nonsense”’, The Washington Post, 5 May 2018, online. ↩︎

- The two key guidances directly referred to in the opening of the Civil Aviation Industry Credit Management Measures (Trial Measures) are the Planning Outline for the Construction of a Social Credit System (2014–2020) and 关于印发《民航行业信用管理办法(试行) 》的通知 (Civil Aviation Industry Credit Management Measures (Trial Measures)), 7 November 2017. ↩︎

The reality of the world we live in today is one in which cyber operations are now the norm. Battlefields no longer exist solely as physical theatres of operation, but now also as virtual ones. Soldiers today can be armed not just with weapons, but also with keyboards. That in the modern world we have woven digital technology so intricately into our businesses, our infrastructure and our lives makes it possible for a nation-state to launch a cyberattack against another and cause immense damage — without ever firing a shot.

Even though it may use the same tools and techniques, cyber espionage, by contrast, is explicitly designed to gather intelligence without having an effect—ideally without detection. The Global Commission on the Stability of Cyberspace has commissioned ASPI’s International Cyber Policy Centre to do further work on defining offensive cyber capabilities.
