More than innovation: Australia needs fast, low-cost defence production

Conflicts in Ukraine and the Middle East have shown that mass and asymmetry characterise modern warfare. The challenge is to deliver affordable mass—weapons in great numbers—while ensuring technology is evolving ahead of rapidly changing threats. This is both a hardware and software challenge.

We rightly applaud when an innovative prototype is delivered in record time, but that is only half the battle won. The rubber hits the road in the defence sector when innovations are efficiently and inexpensively produced at scale to deliver an unexpected asymmetric massed effect to defeat an adversary or disrupt its decision calculus.

In releasing Defence’s innovation, science and technology strategy, Accelerating Asymmetric Advantage—Delivering More, Together, Chief Defence Scientist Tanya Monro in September called on the defence industrial base to help deliver credible, potent and future-ready technology.

The question industry must confront is, how do we evolve our thinking, processes and manufacturing to answer this call? Fundamentally, the entire acquisition process and industrial approach need to be redesigned. They need to embrace modern manufacturing processes with the scale necessary for this era. From concept to prototype, to hardware and software integration, to supply chain and manufacturing systems, industry must be much more agile and use viable commercial processes that offer abundant and readily available materials.

The gold-plated processes of the legacy defence industry are optimised for reliability and exquisiteness, not mass and efficiency. To truly deliver asymmetric advantage to the warfighter we must do things in bold and unconventional ways. That will take vision, creativity and courage.

Manufacturing at scale is hard. Historically, it’s not been an Australian strength. In fact, we have a history of thinking that the invention of new things is the end of the innovation process. The reality is that at-scale manufacturing is 1000 times harder than innovation. The industrial redesign needs to be properly funded and become the end point of Australia’s sovereign manufacturing strategy.

We have seen tremendous examples of Australian ingenuity, with several companies making great progress in developing cheap, responsive and high-rate defence capabilities for Australian and global markets.

In 2023 SYPAQ won the Australian Financial Review’s Most Innovative Companies Award for its Corvo Precision Payload Delivery System, a flat-packed, easily assembled, easily operated, low-cost, expendable drone now used on the Ukraine battlefield.

Nulka, a defence system developed by Australian scientists, is one of Australia’s most successful defence exports. It’s been in operation with the Royal Australian Navy and the US Navy for more than 20 years. Now made by BAE Systems, it’s a leading example of innovation progressing to scale and success across global markets.

Australia doesn’t have the privilege of investing endlessly in innovative ideas without progressing to scale production. We need to invest in the right innovations: those that demonstrate the ability to go rapidly from ideas to production to scale.

Another example is the US company Anduril, which has an Australian subsidiary of which I am executive chairman and chief executive. Everything Anduril builds is focused on large-scale deployment. We ensure products are ready for manufacture from the get-go by designing the production system and the product simultaneously. So, when we have our first prototype of the product, we also know what the production system looks like. We have a full digital twin of the production system, a virtual representation of it. So, when we are ready to scale up production, we already understand what high-rate manufacturing will look like and how to do it efficiently. Our factories and products are modelled on high-rate automotive and consumer technology that delivers mass at a cost-effective price point.

For example, Anduril’s Barracuda (a family of autonomous-aircraft designs, including a cruise missile) is designed for low-cost, hyper-scale production. A Barracuda takes 50 percent less time to produce and requires 95 percent fewer tools and 50 percent fewer parts than competing products on the market today. The fuselage is made using hot pressing, similar to the method used for producing acrylic bathtubs. That process is incredibly cheap and broadly available.

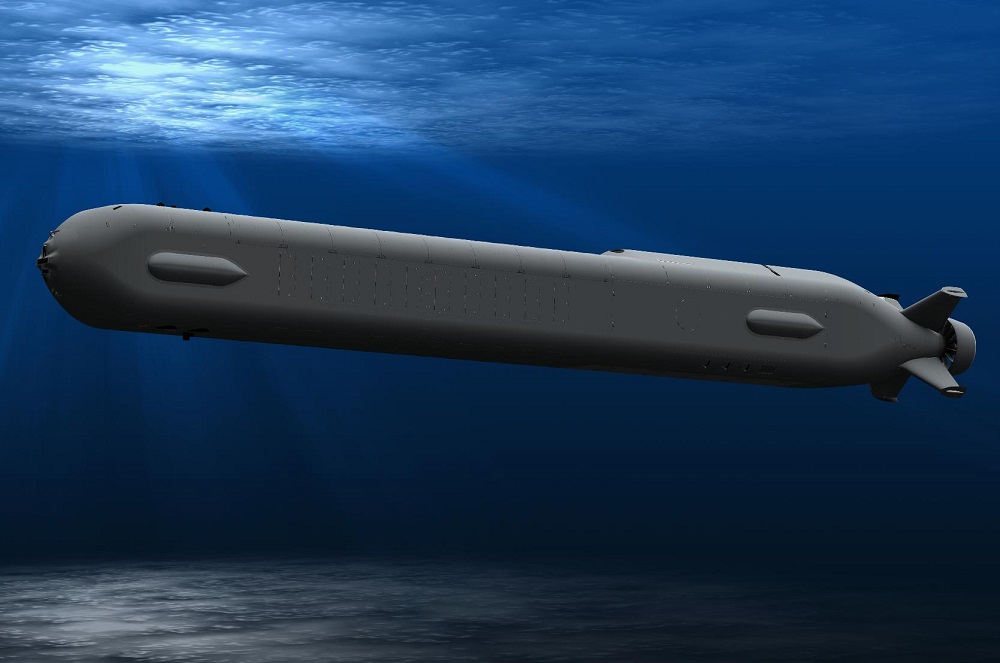

We have used this approach in our Australian Ghost Shark program for an autonomous submarine, working with Australian suppliers to create a world-class massed production system. We are not just building three Ghost Shark prototypes. Each prototype is an opportunity to rapidly incorporate what we’re learning. The modular design and software-first approach allow accelerating improvements to be attained in military service. The agility in the design allows payloads to be rapidly switched in and out for different roles. This is game-changing and could bring a battle-winning advantage to the ADF.

Our asymmetric approach is also being baked into our Australian supply chain. It’s modernising and scaling with us. Our suppliers are installing advanced machinery and AI-powered robotics. We are learning together fast.

Examples like SYPAQ and Anduril show the potential for at-scale manufacturing in Australia to deliver disruptive military value, potentially for allies through exports.

The National Defence Strategy 2024 emphasises the notion of human-machine teaming, in which sophisticated crewed systems are combined with massed autonomy. Australia must be capable of large-scale manufacturing of autonomous systems to deliver asymmetric, low-cost massed effects.